Some time ago, I watched a friend's portable hard drive die and take years of data with it. That got me thinking about building a NAS and doing proper backups. The basic requirements were room for 3 to 4 hard drives and RAID support. This post was originally published on the not-very-famous Wumao Forum (you need an account to read it there, so you won't see it anyway).

After a period of running and tuning, the NAS now works fairly normally, so here are my experiences and lessons learned.

First, platform selection

As for platforms, Synology is undoubtedly the most convenient and worry-free choice. After all, it is a purpose-built NAS platform. However, Synology has two drawbacks.

First, it is poor value. Even the two-bay DS215 costs about 1400 yuan, yet the hardware is frankly rubbish, with a Marvell ARM processor. Second, Synology's system cannot be customized, which is the price paid for convenience. So Synology was the first option ruled out.

The second option is the HP MicroServer, which is also an excellent solution. The entry-level Celeron configuration costs around 2000 yuan and has four drive bays, so it is cheap compared with Synology. As a complete machine, it is also backed by the manufacturer. However, at the time I was too fixated on low power consumption, and on saving a bit more money, so in the end I didn't choose it.

The third option is to build it yourself. This takes more effort, and you may run into compatibility issues, instability and so on. The advantages are that you can get exactly the hardware you need, and it is cheaper. There was an earlier article on Aunt Zhang about assembling a DIY NAS; I picked up a lot of tips from it, and I thank the author. Intel had also just launched its new generation of Braswell low-power processors, which can run without a fan. In the end, price and silent operation were decisive, so I chose to build my own.

Second, hardware

First, the CPU. If you only need storage and downloads, CPU performance doesn't matter much, and you can go for low power and silence. But if you need video playback, you will still want more performance.

I ended up with the J3160. On the Braswell platform it sits just below the Pentium J3710. It costs roughly 100 yuan less and has a TDP 0.5 W lower, at the cost of some Turbo and graphics performance, which doesn't matter here. This 14 nm processor has a TDP of only 6 W, on par with high-end mobile processors; Qualcomm's Snapdragon 810 would go cry in the bathroom.

The nominal power figures quoted for typical phone processors resemble Intel's SDP (scenario design power), a figure tied to usage scenarios. Under full load, those chips actually draw much more than the nominal value. TDP, by contrast, is thermal design power: roughly the maximum heat the processor is designed to dissipate. So in normal operation, when CPU utilization is low, the J3160 draws even less than its TDP.

Building a NAS on Braswell isn't perfect. In my view it has two major drawbacks: the platform natively supports only two SATA ports, and it doesn't support ECC memory. A NAS benefits from more SATA ports, and ECC memory matters for a stable file system. Braswell is also one or two hundred yuan more expensive than the previous-generation J1900 platform. But it is better to buy new than old, and the gains in performance and power consumption are real.

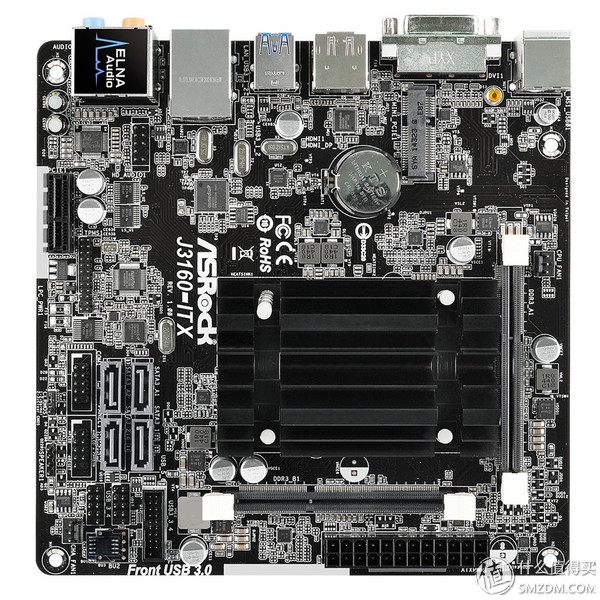

The processor is soldered onto the motherboard, so buying the board gets you the CPU. I chose ASRock's J3160-ITX, which cost 500 yuan on Tmall.

The board's biggest advantage is an onboard controller that adds two more SATA ports, for four in total. This works around the J3160's native limit of two. The heatsink is also larger. As the photo shows, the platform's low power draw means passive cooling is enough, so there is no CPU fan and more room is left in the case. One drawback is that the board has no onboard SSD slot such as mSATA, so the SSD takes up one of the SATA ports. Still, there is probably no better J3160 board available right now.

After the CPU comes memory: from JD I bought an 8 GB Kingston low-voltage DDR3 stick for a little over 100 yuan. As discussed later, I use ZFS for software RAID. ZFS needs at least 4 GB of memory, so 8 GB is the safer choice. Also note that most of these low-power boards claim to support both standard and low-voltage memory, but low-voltage modules seem to be more compatible.

Next is the system disk. Some may ask why a NAS needs one when the system could go directly on a storage drive. The system can indeed be installed on a storage drive, but that makes management cumbersome. If the storage drives form a RAID array and the array fails, recovering is more troublesome than with a separate system disk. Mechanical drives also have poor read/write performance, which slows the system down. So it is best to put the system on a separate SSD.

To save money you could use a USB flash drive instead. Even if its read performance is acceptable, its write performance is poor, and its sustained read/write is worse than a mechanical drive's. A flash drive may also tolerate fewer rewrites than an SSD.

In the end I chose a cheap, rubbish 32 GB Kingston SSD, mainly to save money; anything beyond "good enough" is unnecessary. I planned to run Ubuntu on the NAS (reasons explained later), so 32 GB is plenty for the system disk, and even 16 GB would do.

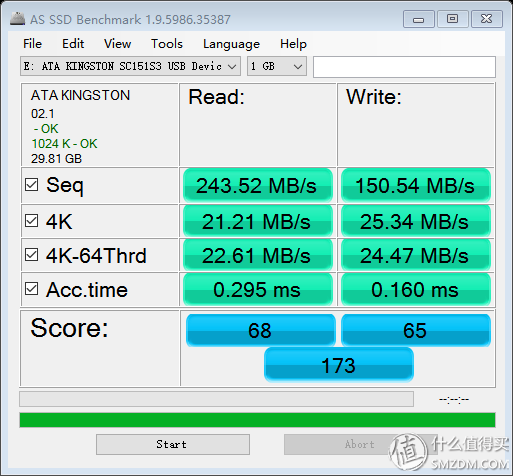

(Image borrowed from Tmall.)

After it arrived, I benchmarked it in a USB 3.0 enclosure. The scores are indeed poor, but it cost just 85 yuan on Taobao, and you can't expect much better at that price. It still beats a mechanical drive, especially in random read/write, where the difference is an order of magnitude. Some may worry about such a cheap drive failing. But it only holds the system, not data. If it breaks, you just buy a new one and reinstall, so its stability isn't a big concern.

Choosing a case was genuinely tricky. On one hand I wanted it small; on the other, I wanted enough internal room, especially for plenty of hard drives. Then I found a gem: the Liren L04 hard drive bracket, which lets you stack multiple drives. The bracket isn't limited to Liren's own cases; inside an ordinary case it can hold quite a few drives. Stacking it too high, though, may affect rigidity.

This way you can use an ordinary ITX case instead of buying a dedicated NAS case, which is much cheaper. That said, a purpose-built NAS case is more compact inside and fits more drives into a smaller volume.

For the case I chose the Silk Bo C2. It is roomier than the V2, but its thermal design is poor.

My plan at the time was to replace the standard ATX power supply with a small one. Freeing up that space would let the drives at the bottom of the case be stacked higher. With that change, fitting four or five 3.5-inch drives into the C2 would be no problem.

However, I still made a mistake when choosing the power supply. Chasing a fanless build, I bought a small ATX mini power supply on Taobao for 199 yuan.

(AC-ATX-120 mini fanless power supply)

To be honest, this power supply is solidly built, and it has generous capacitors inside. The problem is its total length of 16 cm, longer than a standard 14 cm unit. The C2 happens to have a baffle in the way, so the supply couldn't go in its original position. I had no choice but to drill holes in the top of the case and hang the supply there. Fortunately the supply is light, and the case's aluminum alloy is soft, so drilling wasn't hard. I also bought an ATX power supply blanking plate on Taobao for 40 yuan to cover the original power supply opening.

The bigger problem with this power supply is that it is underpowered: total peak output is only 120 W, and the 12 V rail provides only 60 W. With more drives the load may be too much. Even with the three drives I have now, the spin-up surge at boot may exceed it. It is, after all, a small fanless supply.

If you want a fanless build, I recommend a DC-ITX power board with a laptop power adapter instead. A DC-ITX board is also smaller and easier to place inside the case. The downside is cost: a DC-ITX board plus a laptop adapter costs several hundred yuan, and they are all knockoff (shanzhai) brands.
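To put rough numbers on the spin-up concern, here is a back-of-the-envelope sketch of the 12 V budget. It assumes each 3.5-inch drive draws about 2 A on the 12 V rail during spin-up. That is a common datasheet ballpark, not a measured figure for these Seagate drives. The sketch ignores other 12 V loads unless you pass them in, and the function names are hypothetical.

```python
# Back-of-the-envelope 12 V budget check for a small power supply.
# ASSUMPTION: ~2.0 A per 3.5-inch drive on the 12 V rail during spin-up;
# read the real value from your drive's datasheet.

RAIL_12V_LIMIT_W = 60.0  # 12 V rating of the AC-ATX-120 unit described above


def spinup_load_w(num_drives: int, spinup_amps_12v: float = 2.0) -> float:
    """Peak 12 V power if all drives spin up at the same moment."""
    return num_drives * spinup_amps_12v * 12.0


def fits_budget(num_drives: int, spinup_amps_12v: float = 2.0,
                other_12v_w: float = 0.0,
                limit_w: float = RAIL_12V_LIMIT_W) -> bool:
    """True if simultaneous spin-up plus other 12 V loads stays within the rail limit."""
    return spinup_load_w(num_drives, spinup_amps_12v) + other_12v_w <= limit_w


if __name__ == "__main__":
    for n in range(1, 5):
        status = "OK" if fits_budget(n) else "over budget"
        print(f"{n} drive(s): {spinup_load_w(n):.0f} W peak on 12 V -> {status}")
```

Under that assumption, two drives (48 W) fit under the 60 W rail, while three drives (72 W) already exceed it. This matches the worry about the current three-drive setup.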

Last come the hard drives. My approach was simple: buy whichever has the lowest price per TB. I ended up with Seagate 5 TB 5900 rpm Archive drives at under 900 yuan each, and bought two. I also pulled the 7200 rpm 3 TB Seagate out of my old machine and installed it. Whatever the drives lack in performance and reliability is left to RAID. Checking the serial numbers, though, I found the warranty only runs until 2017 instead of the promised two years. Damn these profiteers.

Next, I installed the motherboard and hung the power supply. The horizontal plate in the middle holds the 2.5-inch 32 GB SSD and the old 3.5-inch 3 TB drive. The power supply had only one SATA power connector, so I bought several Molex (large "D" connector) to dual-SATA splitter cables, giving four SATA power connectors. Fortunately, 10 yuan covered it. The machine booted successfully after assembly.

As you can see, the two-tier bracket fits inside the case without problems, as long as the cables are routed well. With these two brackets, 4 to 5 drives can be mounted, plus one on the middle plate, so fitting 5 drives in this case is feasible. The cabling may be troublesome, though. Going beyond four SATA ports would also require an expansion card in the mini-PCIe slot to add two more. The PCIe slot is blocked, so that option is out. In a case this small, with my clumsy hands, cable routing is a nightmare.

In this build, the hard drives alone came to 1800 yuan and everything else to only about 1300, for 3100 in total. The machine alone costs about the same as a two-bay Synology DS215, but performs much better. Hard drives are still really damn expensive. Down with the evil hard drive profiteers!

If you want something easy to use and easy to get started with, a Synology is a good choice for many people. So is a "black" Synology (Synology's system installed on non-Synology hardware).

But for users with some Linux background, I think installing a Linux distribution is the better choice. NAS features such as remote downloading and network file sharing can be provided by software such as Transmission and Samba. In fact, Synology's system can itself be seen as a customized Linux. Tinkering with Linux yourself also gives far more room for extension and fun.

So the rest of this post is for readers with some Linux background. My own skills are average; when I don't know something, I just Google it. First, why Ubuntu? Honestly, I'm not a big fan of Ubuntu. In the past I often ran into inexplicable problems, and the community isn't great, so I used to spend more time tinkering with Gentoo.

But Ubuntu 16.04 Server is the first release to ship ZFS officially with its kernel, so there is no fiddling needed to get ZFS support. It also happens to be a long-term support (LTS) release. Other distributions may support ZFS just as conveniently, but I was too lazy to try them. Besides, a CPU in the J3160's class isn't suited to a source-compiled distribution like Gentoo.

What is ZFS? Put simply, it may be the most advanced file system currently available. For an introduction, see this link

ZFS makes it easy to set up various software RAID configurations, and it even supports rebuilding arrays online.

Here I simply use ZFS to mirror two drives as RAID 1, which takes a single command: `zpool create mypool mirror /dev/sdb /dev/sdc`

Measured performance is decent: the dd command shows write speeds of over 150 MB/s, which isn't bad given that I'm using two weak storage drives. This software RAID needs neither a separate RAID card nor motherboard support, which makes it quite convenient.
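The dd measurement above can be approximated in Python. This is a minimal sketch with a hypothetical function name. It writes a fixed amount of data in 1 MB blocks (1 MB = 1024 × 1024 bytes here), forces it to disk with fsync (roughly what GNU dd's `conv=fdatasync` does), and divides by the elapsed time. It writes random bytes because, if compression were enabled on the dataset, a stream of zeros would compress away and inflate the figure. The `/mypool` path assumes the pool's default mount point.

```python
import os
import tempfile
import time


def sequential_write_mb_s(path: str, total_mb: int = 128, block_mb: int = 1) -> float:
    """Write total_mb of data in block_mb chunks, fsync, and return MB/s."""
    # Random bytes, so a compressing dataset cannot shrink the data and inflate the result.
    block = os.urandom(block_mb * 1024 * 1024)
    blocks = max(1, total_mb // block_mb)
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # wait until the data reaches the disk, similar to dd conv=fdatasync
    elapsed = time.perf_counter() - start
    return blocks * block_mb / elapsed


if __name__ == "__main__":
    # ASSUMPTION: the pool is mounted at its default /mypool; otherwise use the temp dir.
    target_dir = "/mypool" if os.path.isdir("/mypool") else tempfile.gettempdir()
    target = os.path.join(target_dir, "write_test.bin")
    try:
        print(f"sequential write: {sequential_write_mb_s(target):.1f} MB/s")
    finally:
        if os.path.exists(target):
            os.remove(target)
```

Use a test size well beyond what the drive's own cache can absorb; otherwise the number mostly reflects caching rather than the disks.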

If you are more worried about losing data when one drive dies, use RAID 1, though only half the raw capacity is usable. If you're feeling lucky, use RAID 0, but if either of the two drives dies, all the data is lost. Or try RAID 5 (raidz in ZFS) as a middle ground.
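The capacity trade-off between these layouts can be written down in a few lines. The sketch below is illustrative: it assumes identical drives and ignores file system overhead, and the function names are hypothetical. The comments note the matching ZFS layouts. A plain list of disks gives a stripe, `mirror` gives RAID 1, and `raidz` gives RAID 5-style single parity.

```python
def usable_capacity_tb(layout: str, drive_tb: float, n: int) -> float:
    """Usable space for n identical drives, ignoring file system overhead."""
    if layout == "raid0":      # zpool create pool d1 d2 ...        (plain stripe)
        return drive_tb * n
    if layout == "raid1":      # zpool create pool mirror d1 d2 ... (every drive holds a full copy)
        return drive_tb
    if layout == "raid5":      # zpool create pool raidz d1 d2 d3 ... (one drive's worth of parity)
        if n < 3:
            raise ValueError("this sketch treats RAID 5 as needing at least 3 drives")
        return drive_tb * (n - 1)
    raise ValueError(f"unknown layout: {layout}")


def failures_tolerated(layout: str, n: int) -> int:
    """How many drives can die before data is lost."""
    return {"raid0": 0, "raid1": n - 1, "raid5": 1}[layout]


if __name__ == "__main__":
    for layout, n in (("raid0", 2), ("raid1", 2), ("raid5", 3)):
        print(f"{layout} with {n} x 5 TB: {usable_capacity_tb(layout, 5.0, n):g} TB usable, "
              f"survives {failures_tolerated(layout, n)} drive failure(s)")
```

With drives of different sizes, a mirror is limited by the smallest member. A 5 TB drive mirrored with a 3 TB drive therefore gives only 3 TB.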

Whichever you choose, ZFS makes these RAID configurations easy to set up. ZFS also checksums the data itself, so the chance of problems may be even lower than with hardware RAID. A ZFS RAID 5 (raidz) also avoids the write hole problem. ECC memory is still best, though.

ZFS relies heavily on memory for caching, so the more memory the better. The minimum is 4 GB, and the rule of thumb is 1 GB of memory per 1 TB of disk capacity. In practice, though, the cache only needs to cover about 5 seconds of reads and writes. For example, if the whole array can read or write at 500 MB/s, the cache can be set to 500 × 5 = 2500 MB.
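The two sizing rules above are simple arithmetic, sketched below with hypothetical function names. Applied to this build's 5 + 5 + 3 = 13 TB of raw disk, the 1 GB per TB rule asks for 13 GB, more than the 8 GB installed. The throughput rule gives 2500 MB for a 500 MB/s array.

If you want to cap ZFS's cache (the ARC) at such a size on ZFS on Linux, the module parameter is `zfs_arc_max`, in bytes. It is usually set in a file under `/etc/modprobe.d/`. The 5-second window plausibly mirrors ZFS's default transaction group interval (`zfs_txg_timeout`, 5 s by default), but treat that link as an interpretation.

```python
MIB = 1024 * 1024


def ram_rule_of_thumb_gb(raw_tb: float, minimum_gb: float = 4.0) -> float:
    """'1 GB of RAM per 1 TB of disk', but never below the 4 GB minimum."""
    return max(minimum_gb, raw_tb * 1.0)


def cache_for_seconds_mb(throughput_mb_s: float, seconds: float = 5.0) -> float:
    """Cache large enough to hold `seconds` worth of array throughput."""
    return throughput_mb_s * seconds


def arc_max_modprobe_line(cache_mb: float) -> str:
    """Format an ARC size cap as a ZFS on Linux module option (value in bytes)."""
    return f"options zfs zfs_arc_max={int(cache_mb * MIB)}"


if __name__ == "__main__":
    print(ram_rule_of_thumb_gb(5 + 5 + 3))  # raw disk in this build, in TB
    mb = cache_for_seconds_mb(500)          # the 500 MB/s example from the text
    print(mb, arc_max_modprobe_line(mb))
```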

So it really is a heavy user of memory, but then it is a file system designed for servers.

Not long after setting up the pool, I got to experience an online rebuild of a ZFS array for real. While tinkering, I connected a 5 TB drive to a motherboard port alongside the 3 TB drive. This changed the corresponding device file names: what had been /dev/sdc became /dev/sdd. Since the array had been built using device file names, one drive dropped out of it. The array kept working, but in a degraded, single-drive state.

I didn't notice while transferring data. By the time I did, several hundred GB had been copied, so the data on the two drives no longer matched. After I reconfigured the array with the new device name, it rebuilt itself automatically. Meanwhile I could keep using the array and continue copying data without disturbing the rebuild, as if nothing had happened.
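The root cause was building the pool on /dev/sdX names. The kernel assigns these in detection order and can reshuffle them when drives move between ports. A common remedy is to refer to disks through the stable symlinks in `/dev/disk/by-id`, which encode the model and serial number.

The sketch below, with hypothetical function names, maps kernel names to those symlinks and builds the mirror command from them. It only builds the argument list and does not run zpool. For an existing pool, exporting it (`zpool export mypool`) and re-importing it with `zpool import -d /dev/disk/by-id mypool` switches the pool to those names.

```python
import os


def by_id_map(by_id_dir: str = "/dev/disk/by-id") -> dict:
    """Map kernel disk names (e.g. 'sdb') to a stable by-id symlink path."""
    mapping = {}
    for name in sorted(os.listdir(by_id_dir)):
        if "-part" in name:  # skip partition links such as '...-part1'
            continue
        link = os.path.join(by_id_dir, name)
        kernel_name = os.path.basename(os.path.realpath(link))
        # Prefer the readable 'ata-<model>_<serial>' link over duplicates such as 'wwn-...'.
        if kernel_name not in mapping or name.startswith("ata-"):
            mapping[kernel_name] = link
    return mapping


def zpool_mirror_cmd(pool: str, kernel_names: list,
                     by_id_dir: str = "/dev/disk/by-id") -> list:
    """Build a 'zpool create <pool> mirror ...' argument list using by-id paths."""
    ids = by_id_map(by_id_dir)
    return ["zpool", "create", pool, "mirror"] + [ids[k] for k in kernel_names]
```

On the NAS itself, `print(" ".join(zpool_mirror_cmd("mypool", ["sdb", "sdc"])))` would show the command to run. A KeyError means one of the named disks has no by-id link.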

After all the tinkering, I ran a benchmark using UnixBench. Since this machine runs Linux, the usual Windows benchmarking tools are useless. Anyway, I wonder how many people can make sense of the results.

Benchmark Run: Mon Aug 08 2016 22:30:38 - 22:58:35

4 CPUs in system; running 1 parallel copy of tests

| Test | Result | Run time, samples |
|---|---|---|
| Dhrystone 2 using register variables | […].9 lps | 10.0 s, 7 samples |
| Double-Precision Whetstone | 1964.3 MWIPS | 10.1 s, 7 samples |
| Execl Throughput | 924.6 lps | 29.5 s, 2 samples |
| File Copy 1024 bufsize 2000 maxblocks | 390102.5 KBps | 30.0 s, 2 samples |
| File Copy 256 bufsize 500 maxblocks | 112852.9 KBps | 30.0 s, 2 samples |
| File Copy 4096 bufsize 8000 maxblocks | 819176.8 KBps | 30.0 s, 2 samples |
| Pipe Throughput | 900138.5 lps | 10.0 s, 7 samples |
| Pipe-based Context Switching | 53472.1 lps | 10.0 s, 7 samples |
| Process Creation | 1831.5 lps | 30.0 s, 2 samples |
| Shell Scripts (1 concurrent) | 3505.5 lpm | 60.0 s, 2 samples |
| Shell Scripts (8 concurrent) | 1289.1 lpm | 60.0 s, 2 samples |
| System Call Overhead | 1603508.8 lps | 10.0 s, 7 samples |

| System Benchmarks Index Values | Baseline | Result | Index |
|---|---|---|---|
| Dhrystone 2 using register variables | 116700.0 | […].9 | 1039.8 |
| Double-Precision Whetstone | 55.0 | 1964.3 | 357.2 |
| Execl Throughput | 43.0 | 924.6 | 215.0 |
| File Copy 1024 bufsize 2000 maxblocks | 3960.0 | 390102.5 | 985.1 |
| File Copy 256 bufsize 500 maxblocks | 1655.0 | 112852.9 | 681.9 |
| File Copy 4096 bufsize 8000 maxblocks | 5800.0 | 819176.8 | 1412.4 |
| Pipe Throughput | 12440.0 | 900138.5 | 723.6 |
| Pipe-based Context Switching | 4000.0 | 53472.1 | 133.7 |
| Process Creation | 126.0 | 1831.5 | 145.4 |
| Shell Scripts (1 concurrent) | 42.4 | 3505.5 | 826.8 |
| Shell Scripts (8 concurrent) | 6.0 | 1289.1 | 2148.5 |
| System Call Overhead | 15000.0 | 1603508.8 | 1069.0 |

System Benchmarks Index Score: 596.3

Benchmark Run: Mon Aug 08 2016 22:58:35 - 23:26:34

4 CPUs in system; running 4 parallel copies of tests

| Test | Result | Run time, samples |
|---|---|---|
| Dhrystone 2 using register variables | […].7 lps | 10.0 s, 7 samples |
| Double-Precision Whetstone | 7853.5 MWIPS | 10.1 s, 7 samples |
| Execl Throughput | 5003.0 lps | 29.9 s, 2 samples |
| File Copy 1024 bufsize 2000 maxblocks | 418688.0 KBps | 30.0 s, 2 samples |
| File Copy 256 bufsize 500 maxblocks | 125715.4 KBps | 30.0 s, 2 samples |
| File Copy 4096 bufsize 8000 maxblocks | 1015028.9 KBps | 30.0 s, 2 samples |
| Pipe Throughput | 3602730.8 lps | 10.0 s, 7 samples |
| Pipe-based Context Switching | 419679.0 lps | 10.0 s, 7 samples |
| Process Creation | 17943.8 lps | 30.0 s, 2 samples |
| Shell Scripts (1 concurrent) | 11184.5 lpm | 60.0 s, 2 samples |
| Shell Scripts (8 concurrent) | 1455.2 lpm | 60.1 s, 2 samples |
| System Call Overhead | 4594957.5 lps | 10.0 s, 7 samples |

| System Benchmarks Index Values | Baseline | Result | Index |
|---|---|---|---|
| Dhrystone 2 using register variables | 116700.0 | […].7 | 4157.4 |
| Double-Precision Whetstone | 55.0 | 7853.5 | 1427.9 |
| Execl Throughput | 43.0 | 5003.0 | 1163.5 |
| File Copy 1024 bufsize 2000 maxblocks | 3960.0 | 418688.0 | 1057.3 |
| File Copy 256 bufsize 500 maxblocks | 1655.0 | 125715.4 | 759.6 |
| File Copy 4096 bufsize 8000 maxblocks | 5800.0 | 1015028.9 | 1750.0 |
| Pipe Throughput | 12440.0 | 3602730.8 | 2896.1 |
| Pipe-based Context Switching | 4000.0 | 419679.0 | 1049.2 |
| Process Creation | 126.0 | 17943.8 | 1424.1 |
| Shell Scripts (1 concurrent) | 42.4 | 11184.5 | 2637.8 |
| Shell Scripts (8 concurrent) | 6.0 | 1455.2 | 2425.4 |
| System Call Overhead | 15000.0 | 4594957.5 | 3063.3 |

System Benchmarks Index Score: 1749.7

The single-copy score is 596.3, and the four-copy score is 1749.7. Single-core performance is roughly that of a low-cost virtual server; after all, you can't expect much from a low-power platform. Compared with the dual-core Celeron 1037U, its single-core performance is only a little over half. Thanks to its four cores, though, most multi-core results end up ahead of the dual-core chip. Its advantage over the 1037U is that it needs no fan.

During the benchmark, the temperature peaked at just over 50 °C in the single-core run and stayed below 70 °C with all four cores loaded. Turbo reached about 2.2 GHz. That is very respectable for passive cooling.
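The index columns and the final score relate in a simple way. Each index is the result divided by the baseline and multiplied by 10. The overall score is the geometric mean of the twelve indexes.

The sketch below checks this against the tables; its function names are hypothetical. Because the printed indexes are rounded, the recomputed scores should land within a fraction of a point of 596.3 and 1749.7.

```python
import math


def index_value(result: float, baseline: float) -> float:
    """UnixBench index: result relative to the baseline, scaled so the baseline scores 10."""
    return result / baseline * 10.0


def overall_score(indexes: list) -> float:
    """Overall score: the geometric mean of the individual index values."""
    return math.exp(sum(math.log(x) for x in indexes) / len(indexes))


# Index columns copied from the two runs above (already rounded to 0.1).
SINGLE_COPY = [1039.8, 357.2, 215.0, 985.1, 681.9, 1412.4,
               723.6, 133.7, 145.4, 826.8, 2148.5, 1069.0]
FOUR_COPIES = [4157.4, 1427.9, 1163.5, 1057.3, 759.6, 1750.0,
               2896.1, 1049.2, 1424.1, 2637.8, 2425.4, 3063.3]

if __name__ == "__main__":
    print(f"Execl index: {index_value(924.6, 43.0):.1f}")
    print(f"1 copy:   {overall_score(SINGLE_COPY):.1f}")
    print(f"4 copies: {overall_score(FOUR_COPIES):.1f}")
```

A geometric mean keeps one very high index, such as Shell Scripts (8 concurrent) in the single-copy run, from dominating the total the way an arithmetic mean would.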

Fourth, one last issue: temperature

As mentioned earlier, the C2 case's thermal design has real problems. The bottom can take a 12 cm fan, but I put a hard drive there instead, so the whole machine ends up completely fanless.

One of my original goals was fanless silence. In practice, however, the spinning of the 3.5-inch drives turned out to be quite audible, probably because it stands out without any fan noise to mask it. Temperature is the bigger problem: the drives run hotter than the CPU and feel unusually hot. The heat from the power supply can't be underestimated either; it runs even hotter than the drives. The CPU is probably the coolest part of all.

So did this fail? Of course I wasn't going to give up here. After ruling out water cooling, I looked carefully at how the NAS actually runs. Most of the time the drives don't need to be active, and BT downloads finish quickly because the campus network has IPv6. So the solution is simply to let the drives sleep.

On Linux, drive power saving is controlled with hdparm, whose -B parameter sets the drive's Advanced Power Management (APM) value. Values from 1 to 127 allow the drive to spin down, while 128 to 254 do not. Higher values mean higher power consumption, but also higher performance.

My drives were effectively set to 254, so they kept spinning at full speed and stayed hot for long periods. I therefore set the value to 127. For the setting to survive a reboot, you also need to edit the /etc/hdparm.conf configuration file.
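The APM ranges and the persistent setting can be captured in a small sketch with hypothetical function names. The one-off command is `hdparm -B 127 /dev/sdb`. The value ranges follow hdparm's manual, where 255 disables APM entirely. The config output assumes the per-device block syntax of Debian/Ubuntu's `/etc/hdparm.conf` (`apm = ...` inside `{ }`). The device path below is hypothetical; a `/dev/disk/by-id` link survives port changes better than `/dev/sdX`.

```python
def apm_behavior(value: int) -> str:
    """Describe an hdparm -B (APM) value using the ranges from hdparm's manual."""
    if value == 255:
        return "APM disabled"
    if 1 <= value <= 127:
        return "spin-down allowed"
    if 128 <= value <= 254:
        return "no spin-down"
    raise ValueError("APM values run from 1 to 255")


def hdparm_conf_stanza(device: str, apm: int) -> str:
    """Build a per-device block for /etc/hdparm.conf (Debian/Ubuntu syntax assumed)."""
    apm_behavior(apm)  # raises ValueError on out-of-range values
    return f"{device} {{\n\tapm = {apm}\n}}\n"


if __name__ == "__main__":
    print(apm_behavior(254), "->", apm_behavior(127))
    # Hypothetical device path; substitute your drive's real by-id link.
    print(hdparm_conf_stanza("/dev/disk/by-id/ata-EXAMPLE_SERIAL", 127))
```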

The end result is that the drives sleep most of the time and spin up occasionally when in use. Their temperature now stays around 40 °C, and the power supply no longer gets hot. The only drawback is more head load/unload cycles, tracked by the SMART attribute Load_Cycle_Count. The count rises by about 100 every two to three days. Drives are typically rated for around 600,000 load cycles, so the drive will probably fail from something else long before it uses them up.
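The "won't run out" claim is easy to check. The sketch below uses a hypothetical function name and the figures from the paragraph above. The current count can be read from SMART attribute 193 (Load_Cycle_Count), for example with `smartctl -A` from smartmontools, and passed in as `current_count`.

```python
def years_until_rated_cycles(rated_cycles: float, added: float, over_days: float,
                             current_count: float = 0.0) -> float:
    """Years until Load_Cycle_Count reaches its rating at the observed rate."""
    per_day = added / over_days
    return (rated_cycles - current_count) / per_day / 365.25


if __name__ == "__main__":
    for days in (2, 3):  # "about 100 every two to three days"
        years = years_until_rated_cycles(600_000, 100, days)
        print(f"100 cycles per {days} days: about {years:.0f} years")
```

At 50 cycles per day, 600,000 cycles last 12,000 days. Over the observed range, that works out to roughly 33 to 49 years, far longer than the drive's likely service life.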