In commercial software, computer chips have largely been forgotten; for business applications, hardware is a commodity. Manufacturing applications, by contrast, are still more focused on hardware, because robotics is closely tied to specific physical devices.

Taken as a whole, in artificial intelligence (AI), and specifically in deep learning (DL), hardware and software are more closely connected than at any time since the 1970s. My recent "Management AI" articles covered overfitting and bias, two major risks in machine learning (ML) systems. This column delves into the hardware acronyms that many managers, especially line-of-business managers, may encounter. Two of them come up constantly in discussions of machine learning systems: Graphics Processing Unit (GPU) and Field Programmable Gate Array (FPGA).

It helps to understand the value of GPUs, which accelerate the tensor processing that deep learning applications require. FPGAs are of interest to those looking for ways to research new AI algorithms, train these systems, and begin deploying the low-volume custom systems now being explored in many industrial AI applications. Although there is some discussion of FPGAs' ability to handle training, I think their early use will derive from the F, for "field".

For example, training an inference engine (the core of a machine learning "machine") may require gigabytes or even terabytes of data. When running inference in a data center, the computer must handle a potentially growing number of concurrent user requests. In edge applications, whether in a drone used to inspect pipelines or in a smartphone, the device must be small yet still effective and adaptable. Simply put, a CPU and a GPU are two separate devices, whereas an FPGA can have different blocks doing different things, making it possible to provide a robust system on a chip. Given all these different requirements, it is best to understand the current state of the system architectures that can support them.
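To put "gigabytes or even terabytes" in perspective, here is a back-of-envelope calculation. The dataset dimensions are hypothetical assumptions chosen for illustration (one million 224 × 224 RGB images), not figures from any specific system.

```python
def dataset_size_bytes(num_images: int, height: int, width: int,
                       channels: int = 3, bytes_per_value: int = 1) -> int:
    """Raw, uncompressed size of an image dataset in bytes."""
    return num_images * height * width * channels * bytes_per_value

# Hypothetical dataset: one million 224x224 RGB images.
raw = dataset_size_bytes(1_000_000, 224, 224)                            # 8-bit channels
as_float32 = dataset_size_bytes(1_000_000, 224, 224, bytes_per_value=4)  # 32-bit floats
print(f"{raw / 1e9:.1f} GB raw, {as_float32 / 1e9:.1f} GB as float32")
# → 150.5 GB raw, 602.1 GB as float32
```

A modest image collection already lands in the hundreds of gigabytes once it is converted to the floating-point form that training typically consumes.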

Two main types of chip design drive current ML systems: GPUs and FPGAs. In the medium term (at least a few years out), there are also hints of new technologies that may become game changers. Let's take a look.

Graphics Processing Unit (GPU)

The biggest chip in the machine learning world is the graphics processing unit (GPU). How did a chip used mainly for computer games, to make things look better on computer monitors, become crucial to machine learning? To understand this, we must go back to the software layer.

The current champion of machine learning is the deep learning (DL) system. DL systems are based on a variety of algorithms, including deep neural networks (DNN), convolutional neural networks (CNN), recurrent neural networks (RNN), and many other variants. The keyword in all three of these terms is "network". The algorithms are variations on a theme: several layers of nodes, with different types of connections between nodes and between layers.
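A minimal sketch of the "layers of nodes" theme, in plain Python for illustration: each layer is a weight matrix plus a bias vector, and passing data through the network is a repeated matrix-vector multiplication followed by a simple nonlinearity. The layer sizes and weights are arbitrary made-up values, not any particular DNN, CNN, or RNN.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]

def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (one row per output node) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def relu(v: Vector) -> Vector:
    """Element-wise nonlinearity applied at each node."""
    return [max(0.0, a) for a in v]

def forward(layers: List[Tuple[Matrix, Vector]], x: Vector) -> Vector:
    """Pass x through each (weights, biases) layer in turn."""
    for w, b in layers:
        x = relu([a + bi for a, bi in zip(matvec(w, x), b)])
    return x

# Hypothetical network: 3 inputs -> 2 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -1.0, 0.25], [1.0, 0.5, -0.5]], [0.0, 0.25]),
    ([[1.0, 2.0]], [-0.5]),
]
print(forward(layers, [2.0, 1.0, 4.0]))  # → [2.0]
```

Almost all of the work is the multiply-and-add inside `matvec`, which is exactly the kind of tensor arithmetic the following paragraphs describe.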

What is being processed is multiple arrays, or matrices. A more precise term for these is tensor, which is why the word is used throughout the machine learning industry, for example in TensorFlow.

Now back to your computer screen. You can think of it as a matrix of pixels, or dots, arranged in rows and columns. This is a two-dimensional matrix, or tensor. When you add color, which adds bits to each pixel, and want a coherent image that changes rapidly, the computation quickly becomes complex and eats up cycles on a CPU that works step by step. The GPU has its own memory, which can hold the entire graphic image as a matrix. Tensor math can then be used to calculate the changes in the image and update only the affected pixels on the screen. This is much faster than redrawing the entire screen every time the image changes.
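A toy illustration of the idea, assuming a frame stored as a height × width grid of RGB triples (a three-dimensional tensor once color is added): compare the new frame with the current one and rewrite only the pixels that differ. Real GPUs and display pipelines are far more involved; the helper names here are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), 0-255 each
Frame = List[List[Pixel]]      # rows x columns x 3 color channels

def blank_frame(height: int, width: int) -> Frame:
    return [[(0, 0, 0) for _ in range(width)] for _ in range(height)]

def changed_pixels(old: Frame, new: Frame) -> List[Tuple[int, int]]:
    """Return (row, col) coordinates whose color differs between frames."""
    return [(r, c)
            for r, (old_row, new_row) in enumerate(zip(old, new))
            for c, (p, q) in enumerate(zip(old_row, new_row))
            if p != q]

def apply_updates(screen: Frame, new: Frame) -> int:
    """Copy only the changed pixels onto the screen; return how many."""
    coords = changed_pixels(screen, new)
    for r, c in coords:
        screen[r][c] = new[r][c]
    return len(coords)

screen = blank_frame(4, 6)
next_frame = [row[:] for row in screen]
next_frame[1][2] = (255, 0, 0)   # one pixel turns red
next_frame[3][5] = (0, 0, 255)   # another turns blue

updated = apply_updates(screen, next_frame)
print(f"updated {updated} of {4 * 6} pixels")  # → updated 2 of 24 pixels
```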

NVIDIA was founded in 1993 to create a chip that could solve matrix problems that general-purpose processors such as CPUs could not handle efficiently. This was the birth of the GPU.

Matrix operations don't care what the end product is; they just process elements. This is a slight oversimplification, because operations behave differently on sparse matrices (those with many zeros) and dense matrices, but what the elements represent does not change the operation. When deep learning researchers saw the development of GPUs, they quickly adopted them to accelerate tensor operations.
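A small sketch of the sparse-versus-dense point, in plain Python for illustration: the same matrix-vector product is computed once over every element and once over only the stored non-zero entries, here kept in a simple dictionary-of-keys layout (one of several common sparse formats). The matrix could hold pixel values or network weights; the answer is identical either way, and only the amount of work differs.

```python
from typing import Dict, List, Tuple

def dense_matvec(m: List[List[float]], x: List[float]) -> Tuple[List[float], int]:
    """Multiply every element; return (result, number of multiplications)."""
    result, mults = [], 0
    for row in m:
        total = 0.0
        for a, b in zip(row, x):
            total += a * b
            mults += 1
        result.append(total)
    return result, mults

def to_sparse(m: List[List[float]]) -> Dict[Tuple[int, int], float]:
    """Keep only non-zero entries, keyed by (row, col)."""
    return {(r, c): v for r, row in enumerate(m) for c, v in enumerate(row) if v != 0}

def sparse_matvec(s: Dict[Tuple[int, int], float], n_rows: int,
                  x: List[float]) -> Tuple[List[float], int]:
    """Multiply only the stored non-zeros; return (result, multiplications)."""
    result = [0.0] * n_rows
    for (r, c), v in s.items():
        result[r] += v * x[c]
    return result, len(s)

m = [[0.0, 2.0, 0.0, 0.0],
     [0.0, 0.0, 0.0, 3.0],
     [1.0, 0.0, 0.0, 0.0]]
x = [1.0, 2.0, 3.0, 4.0]

dense, dense_work = dense_matvec(m, x)
sparse, sparse_work = sparse_matvec(to_sparse(m), len(m), x)
print(dense, dense_work)    # → [4.0, 12.0, 1.0] 12
print(sparse, sparse_work)  # → [4.0, 12.0, 1.0] 3
```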

GPUs have been essential to the development of machine learning, powering both training and inference in data centers. For example, the NVIDIA Volta V100 Tensor Core GPU continues to push acceleration, both through its underlying architecture and through its ability to run inference at lower precision (a topic for another day: lower precision means fewer bits, which means faster processing). However, there are other issues to consider when it comes to the Internet of Things.
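To make "fewer bits" concrete, here is a minimal sketch of one common technique, symmetric 8-bit quantization, assuming 32-bit floating-point weights as the starting point. It is not how the V100 or any particular toolkit does it; it only shows that each value shrinks from 32 bits to 8 at the cost of a small rounding error.

```python
from array import array
from typing import List, Tuple

def quantize_int8(values: List[float]) -> Tuple[array, float]:
    """Map floats to int8 with a single scale: q = round(v / scale)."""
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / 127.0
    q = array("b", (max(-127, min(127, round(v / scale))) for v in values))
    return q, scale

def dequantize(q: array, scale: float) -> List[float]:
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]

weights = array("f", [0.82, -1.27, 0.05, 0.4, -0.33, 1.1])  # 32-bit floats
q, scale = quantize_int8(list(weights))

print(len(weights) * weights.itemsize, "bytes as float32")  # → 24 bytes as float32
print(len(q) * q.itemsize, "bytes as int8")                 # → 6 bytes as int8
error = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))
print(f"max rounding error: {error:.4f}")
```

Moving a quarter of the data, and doing arithmetic on narrower numbers, is where the speed and power savings of low-precision inference come from.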

Field Programmable Gate Array (FPGA)

In the field, applications of all kinds have different needs. There are many different application areas: vehicles, pipelines, robots, and more. Companies in different industries could design a different chip for each type of application, but this can be very expensive and undermine the company's return on investment. It can also delay time to market and cause important business opportunities to be missed. This is especially true for highly customized needs whose markets are too small to provide economies of scale.
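The economics can be sketched with a simple break-even model. Every number below is a hypothetical placeholder, not real pricing: a custom chip (ASIC) carries a large one-time design cost but a low per-unit cost, while an off-the-shelf FPGA has little up-front cost but a higher per-unit price. Below the break-even volume, the FPGA is cheaper overall.

```python
def total_cost(one_time: float, per_unit: float, units: int) -> float:
    """Up-front (non-recurring) cost plus per-unit cost times volume."""
    return one_time + per_unit * units

def break_even_units(asic_one_time: float, asic_unit: float,
                     fpga_one_time: float, fpga_unit: float) -> float:
    """Volume at which both options cost the same."""
    return (asic_one_time - fpga_one_time) / (fpga_unit - asic_unit)

# Hypothetical figures for illustration only.
ASIC_NRE, ASIC_UNIT = 2_000_000.0, 10.0
FPGA_NRE, FPGA_UNIT = 50_000.0, 100.0

n = break_even_units(ASIC_NRE, ASIC_UNIT, FPGA_NRE, FPGA_UNIT)
print(f"break-even at about {n:,.0f} units")  # → break-even at about 21,667 units
for units in (1_000, 100_000):
    asic = total_cost(ASIC_NRE, ASIC_UNIT, units)
    fpga = total_cost(FPGA_NRE, FPGA_UNIT, units)
    print(units, "ASIC" if asic < fpga else "FPGA", "is cheaper")
```

Highly customized, low-volume applications sit to the left of the break-even point, which is where a programmable part pays off.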

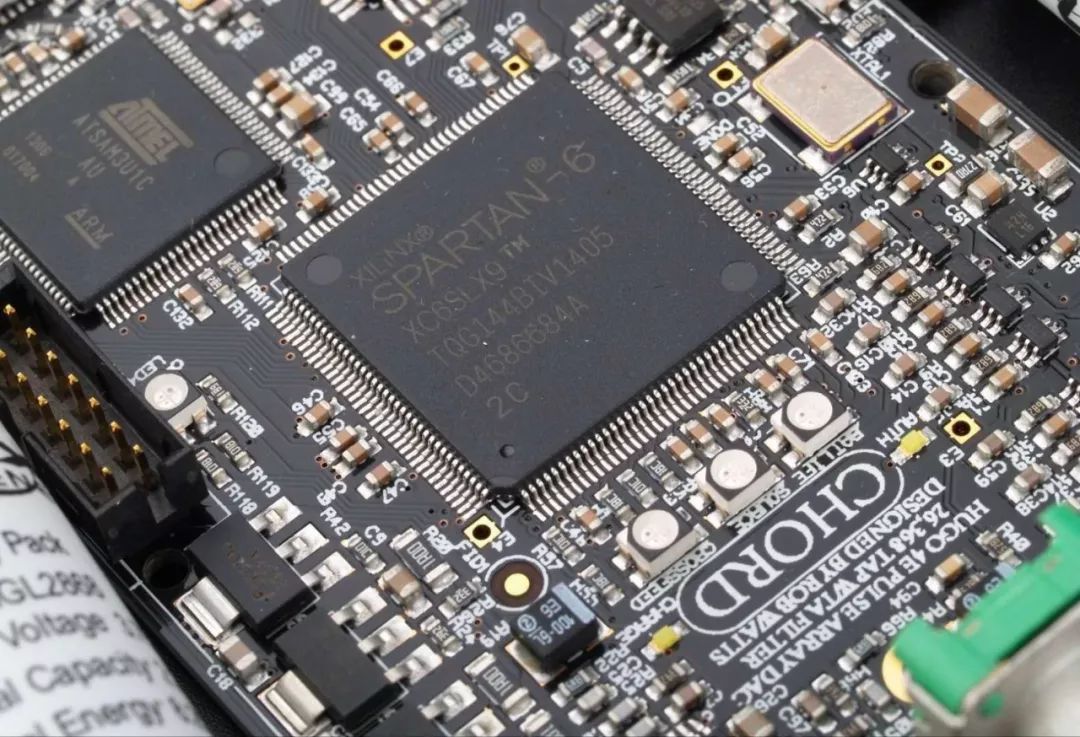

FPGAs are chips that help companies and researchers solve this problem. An FPGA is an integrated circuit that can be programmed for multiple uses. It has an array of "programmable logic blocks" and a way to program both the blocks and the connections between them. It is a general-purpose tool that can be customized for many uses. Major suppliers include Xilinx and National Instruments.
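Many FPGAs implement their programmable logic blocks as small lookup tables (LUTs): the "program" is simply the truth table stored in the block. The toy model below is a deliberate simplification that ignores routing, clocks, and flip-flops; it shows how the same two-input block can be reprogrammed from AND to XOR by loading a different table. The class and helper names are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, Tuple

Table = Dict[Tuple[int, int], int]

class LogicBlock:
    """Toy 2-input lookup table (LUT): outputs are read from a stored table."""

    def __init__(self) -> None:
        self.table: Table = {}

    def program(self, truth_table: Table) -> None:
        """'Reprogram' the block by loading a new truth table."""
        self.table = dict(truth_table)

    def __call__(self, a: int, b: int) -> int:
        return self.table[(a, b)]

def truth_table(fn: Callable[[int, int], int]) -> Table:
    """Build a LUT configuration from any 2-input boolean function."""
    return {(a, b): fn(a, b) for a, b in product((0, 1), repeat=2)}

block = LogicBlock()
block.program(truth_table(lambda a, b: a & b))
print([block(a, b) for a, b in product((0, 1), repeat=2)])  # → [0, 0, 0, 1]

block.program(truth_table(lambda a, b: a ^ b))              # same block, new job
print([block(a, b) for a, b in product((0, 1), repeat=2)])  # → [0, 1, 1, 0]
```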

It is worth noting that the lower cost of chip design does not make FPGAs a low-cost option. They are usually best suited to research or industrial applications. The circuit and design complexity that makes them programmable also makes them unsuitable for low-cost consumer applications.

Because FPGAs can be reprogrammed, they are valuable in the emerging field of machine learning. New algorithms are constantly being added, and different algorithms can be fine-tuned by reprogramming the blocks. In addition, low-power FPGAs running low-precision inference are a good fit for remote sensors. Although the inventors meant "field" to refer more to the "customer", the real advantage of FPGAs in implementing AI applications lies in the physical field. Whether for infrastructure such as factories, roads, and pipelines, or for remote inspection by drones, FPGAs give system designers the flexibility to use one piece of hardware for multiple purposes, resulting in simpler physical designs that are easier to deploy in the field.

New architectures are coming

GPUs and FPGAs are the technologies currently helping to meet the challenge of extending machine learning's impact across many markets. They have also drawn more attention to the industry's development, prompting efforts to create new architectures that can be put to use in time.

On one hand, many companies are trying to apply the lessons of tensor computing on the GPU. Hewlett-Packard, IBM, and Intel all have projects developing next-generation tensor computing devices specifically for deep learning. Meanwhile, startups such as Cambricon, Graphcore, and Wave Computing are attempting the same.

On the other hand, Arm, Intel, and other companies are designing architectures that take full advantage of both GPUs and CPUs, with devices aimed at the machine learning market. These designs are said to handle more than concentrated tensor computation, offering more powerful general processing as well.

Although some of these organizations are focusing on data centers and others on the Internet of Things, it is too early to say much about any of them.

From global companies to startups, one caveat is that, apart from the earliest announcements, no further information has appeared. It would be a surprise to see even the first device samples before 2020, so these products are unlikely to reach the market for at least five years.
